Tips

This article covers tips to make sure your app works consistently and efficiently.

Use Blue, not Relay

If you're using the Lacewing Relay protocol, use the Lacewing Blue set.

The Blue object set has been steadily stabilized and has extra features. And remember, none of the bugs in Relay will be fixed.

Make sure you keep the Blue objects updated from the Darkwire extension list. Later versions even have an update notification system, although it's only active in the editor, not in built EXEs.

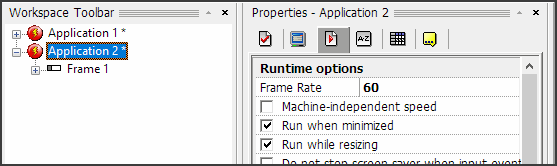

Enable run while resizing/minimized

Otherwise, your app will stop syncing.

If these settings in the app properties are disabled, the Fusion runtime pauses while the app is minimized or resized, which is not desirable.

If Lacewing is in single-threaded mode and the Fusion runtime pauses, you'll be disconnected by a timeout; in multi-threaded mode (Blue only), you'll get a backlog of queued messages to process. So make sure both of these settings are ON.

Run while resizing also applies in circumstances other than resizing, such as when you drag the window around the screen.

Multi-threaded is preferred

Enable it in the Blue object properties. It is only available in Blue.

Both single-threaded and multi-threaded modes perform well, but in single-threaded mode, Fusion's runtime thread has to wait for Blue to do everything it needs to, even work that won't cause any events, like responding to a ping message.

This is particularly important in Blue Server, as disabling event triggering using the Enable Condition set of actions means that more can be handled on the Lacewing thread in multi-threaded mode.

More details on how the threading system works are described under the properties of Bluewing Client.

If you get bugs in one mode and not in the other, report them; don't just switch.

Pausing apps

Avoid pausing apps, as it will cause either a timeout or a backlog of queued messages to process. Consider disconnecting when pausing, or limiting how long you can pause for.

Alternatively, use a "fake pause" instead of the built-in pause that freezes the event loop. Common ways of doing this are putting your game code into groups of events that you deactivate, and having code to pause all movements. Visibly, the game appears paused, but in the background, it keeps running.

Some coders use Surface Object and its Blit From > Advanced > Window Handle action, with the FrameWindowHandle("Surface") expression, to create a screenshot of the frame. Then they display the captured screen inside the Surface over their frame, so people see the screenshot instead of the objects animating behind it.

Don't duplicate the Client/Server objects

If you read how global objects work in the When selection is changed article, you'll see why you shouldn't have duplicate Client objects, whether they share the same global ID or are separate local objects.

Firstly, you'd have to add conditions to each triggered event to confirm the right object triggered it.

Secondly, shared global objects generate one-off events more than once; e.g. an On Channel Leave for "TestChannel" will run once for each shared instance. That's a useful feature if you're sharing the object's connection with another global object in a sub-app, as On Channel Leave triggers for both the running app and its sub-app, but it becomes very confusing when it looks like you've left the same channel twice, which the Lacewing spec doesn't allow.

Don't read using the cursor in conditions

Because cursor expressions move the index even if the condition turns out false, using them in conditions will really confuse you, as you'll have to mentally track every position the index could be at.

Just use the non-cursor, explicit index expressions in conditions instead.
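A minimal C++ sketch of why this bites, assuming a hypothetical `MessageReader` class rather than Blue's real code: a cursor read advances the cursor as a side effect, so a condition that reads and then fails has still moved it, while an explicit-index read leaves everything as it was.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <utility>
#include <vector>

// Hypothetical reader, for illustration only; not Blue's real implementation.
class MessageReader {
public:
    explicit MessageReader(std::vector<std::uint8_t> data) : data_(std::move(data)) {}

    // Cursor read: returns the byte at the cursor and advances the cursor,
    // whether or not the condition using the result ends up true.
    std::uint8_t readByteAtCursor() { return data_.at(cursor_++); }

    // Explicit-index read: returns the byte at `index` with no side effects.
    std::uint8_t readByteAt(std::size_t index) const { return data_.at(index); }

    std::size_t cursor() const { return cursor_; }

private:
    std::vector<std::uint8_t> data_;
    std::size_t cursor_ = 0;
};
```

For example, a condition comparing `readByteAtCursor()` to 5 on the message `7, 5, 9` is false, yet the cursor now points at index 1, so the next cursor read in your actions returns 5 instead of 7.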

Prefix channel names

This is covered more under Unique channel prefixes.

If you're using a public server, or your own server shared between several of your apps, read that article.

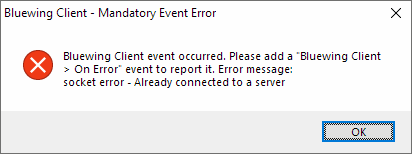

Add Error, Disconnect and Denied conditions

This one should be done in all apps, including ones you don't intend to publish.

Lacewing Blue Client requires your events to handle the Error, Disconnect and On XX Denied conditions; otherwise, you will get a Mandatory Event Error message box.

Blue Client:

Add a Denied condition for every request you make. Even if your server's Fusion code never denies anything, Blue has built-in checks that will deny requests, such as trying to set a blank name or a name that's already in use.

So, add On Error, Connection > On connection denied, Connection > On disconnect, Name > On name denied, Channel > On channel join denied, and Channel > On channel leave denied.

If you are using a public server, listen for text messages the server sends directly to you on subchannels 0 and 1; they will report why you're being kicked if you misuse the server. And always read the welcome message, as it sets out the conditions under which you can use the server; you may get banned from public servers if you ignore them.

Blue Server:

You should listen to On Error at the minimum, because it reports not just clients misusing the server, but also clients being disconnected for inactivity, and your own server code trying to do things it can't.

If you're concerned about speed of the server responding to all those events, worry not – you can disable most of the triggered events, or even set up automatic responses at runtime using the Enable Conditions actions.

Code for multiple errors

A single event can generate multiple errors, so append the error texts together, or report them in a way that lets multiple errors be seen at once.
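One way to do this, sketched in C++ with a hypothetical `ErrorLog` class: each On Error trigger appends its text, and the combined report is shown once after the event, so one error never overwrites another.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical error collector: On Error appends, the report is shown once.
class ErrorLog {
public:
    void add(const std::string& text) { errors_.push_back(text); }

    bool empty() const { return errors_.empty(); }

    // Joins every collected error on its own line, then clears the log.
    std::string flush() {
        std::string report;
        for (const std::string& e : errors_) {
            if (!report.empty()) report += '\n';
            report += e;
        }
        errors_.clear();
        return report;
    }

private:
    std::vector<std::string> errors_;
};
```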

Don't test for the built-in tests

Re-used names, blank names, all-whitespace names and so forth are checked by the Server by default, so you don't need to check for them. On the client side, Blue Client does thorough checks, but Relay Client much less so.

Before you do checks like "is a client trying to join a channel they've already joined", "is a name too long", "is Adam trying to join the no-Adams channel", ask yourself whether a generic, non-custom Lacewing server would need to check that.

If your conclusion is yes, feel free to open a Tester app linked in the How to debug your app topic, and test out your theory.

(In the above example, the first two check scenarios are generic, as any Lacewing server could have that happen. The third is specific to an app that sequesters the Adams away from the peasants.)

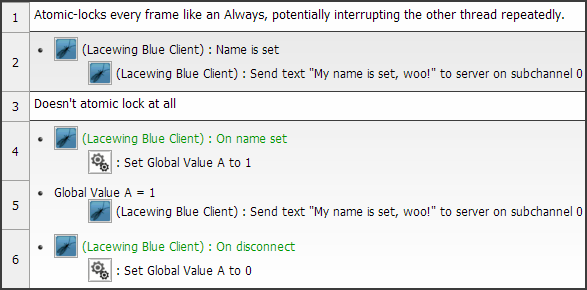

Minimize the use of Lacewing non-triggered conditions

Try to avoid using non-triggered Lacewing conditions outside of triggered events.

In Blue, all the conditions test the underlying data, and are protected from interfering with other threads by an "atomic lock"… which is relatively fast, but will still pause other threads that try to take the lock at the same time. Testing the condition is thus slower than storing its result elsewhere and reading that instead.

For example, instead of using the Name > Client has a name condition, add a Name > On name set event that sets a global value, and test that value instead.
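A C++ sketch of the difference, assuming hypothetical `SharedClientState` and `AppGlobals` types; a `std::mutex` stands in for Blue's lock here, and Blue's real internals may differ. The locked query pays for the lock on every test, while the flag set in the triggered event is a plain read.

```cpp
#include <cassert>
#include <mutex>
#include <string>

// Hypothetical state shared between the Lacewing thread and the Fusion thread.
class SharedClientState {
public:
    // Called on the Lacewing thread when the server confirms a name.
    void setName(const std::string& name) {
        std::lock_guard<std::mutex> lock(mutex_);
        name_ = name;
    }

    // Like a non-triggered "Client has a name" condition: takes the lock
    // every time it is tested, briefly blocking the other thread.
    bool hasName() const {
        std::lock_guard<std::mutex> lock(mutex_);
        return !name_.empty();
    }

private:
    mutable std::mutex mutex_;
    std::string name_;
};

// The cheaper pattern: a plain flag, like a Fusion global value, that is
// set once in the triggered event and read afterwards without any lock.
struct AppGlobals {
    bool nameSet = false;
};

// Hypothetical "On name set" handler.
void onNameSet(AppGlobals& globals) { globals.nameSet = true; }
```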

Select by ID, not name

A client or channel ID is a two-byte unsigned short; no matter how many clients or channels there are, it is always a two-byte number.

A name comparison first simplifies the name as described in Unicode notes, then compares code point by code point until it finds one that differs from what it's looking for. To confirm a match, it must loop through and compare every code point.

Selection by ID, which compares two bytes regardless of the ID's value, is much faster than selection by name, which varies in length and requires a sub-loop.

As of this writing, Blue Server has features for selecting a channel by ID, but these aren't accessible in the A/C/E menus.

In Blue, as a performance booster, names are stored twice per client/channel: once for reading in selected name expressions, and once as a pre-simplified name, so a selection by name action doesn't need to simplify both the metaphorical needle and each haystack every time.

However, despite that boost, selecting by name is still not going to be faster than selecting by ID.
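To make the cost difference concrete, here is a C++ sketch assuming a simple list of channels; `simplify` is a hypothetical placeholder that only lowercases ASCII, unlike Blue's Unicode-aware simplification, and Blue's actual data structures may differ. Each ID comparison is a fixed two-byte compare, while each name comparison costs more the longer the names are, even with the pre-simplified copy.

```cpp
#include <algorithm>
#include <cassert>
#include <cctype>
#include <cstdint>
#include <string>
#include <vector>

// Hypothetical stand-in for Blue's name simplification: ASCII lowercasing only.
std::string simplify(const std::string& name) {
    std::string out = name;
    std::transform(out.begin(), out.end(), out.begin(),
                   [](unsigned char c) { return static_cast<char>(std::tolower(c)); });
    return out;
}

struct Channel {
    std::uint16_t id;
    std::string name;            // original name, for name expressions
    std::string simplifiedName;  // simplified once, when the name is set
};

// Selection by ID: each comparison is a single two-byte compare.
const Channel* findById(const std::vector<Channel>& channels, std::uint16_t id) {
    for (const Channel& c : channels)
        if (c.id == id) return &c;
    return nullptr;
}

// Selection by name: simplify the needle once, then each comparison is a
// string compare whose cost grows with the length of the names.
const Channel* findByName(const std::vector<Channel>& channels, const std::string& name) {
    const std::string needle = simplify(name);
    for (const Channel& c : channels)
        if (c.simplifiedName == needle) return &c;
    return nullptr;
}
```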

As a side note, all messages in the Lacewing protocol use two-byte IDs, not names. The only exceptions are messages where a name is first introduced: name set, channel join, peer connect, and peer name change messages.

Bandwidth is performance

Processing messages takes CPU time, and CPU time is shared by everything: refreshing the display, processing the Fusion event sheet, and the internal operations of extensions like Bluewing.

Use binary messages

Binary messages make messages much more efficient to send, write and read back. How they work is covered in Types of messages. You can easily save a lot of bytes per message.
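For a sense of the savings, this C++ sketch compares a position sent as text ("x,y") with the same position packed as two 16-bit integers. The little-endian layout and the `encodeText`/`encodeBinary`/`readInt16` helpers are assumptions for illustration, not Blue's API; see Types of messages for what Blue actually provides.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <string>
#include <vector>

// Text encoding, as a beginner might send it: "x,y".
std::string encodeText(std::int16_t x, std::int16_t y) {
    return std::to_string(x) + "," + std::to_string(y);
}

// Binary encoding: two 16-bit signed integers, little-endian, 4 bytes total.
std::vector<std::uint8_t> encodeBinary(std::int16_t x, std::int16_t y) {
    std::vector<std::uint8_t> out;
    for (std::int16_t v : {x, y}) {
        const auto u = static_cast<std::uint16_t>(v);
        out.push_back(static_cast<std::uint8_t>(u & 0xFF));
        out.push_back(static_cast<std::uint8_t>(u >> 8));
    }
    return out;
}

// Reads back a 16-bit signed integer written by encodeBinary.
std::int16_t readInt16(const std::vector<std::uint8_t>& buf, std::size_t offset) {
    const auto u = static_cast<std::uint16_t>(buf.at(offset) | (buf.at(offset + 1) << 8));
    return static_cast<std::int16_t>(u);
}
```

For the position (1234, 567), the text form is 8 bytes and the binary form is always 4, however many digits the coordinates have.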

You can also compress binary messages using zlib, if you're sending big binary chunks (1024 bytes or more).

You should read the Examples topic, specifically the compression part, if you're compressing and/or using parts.

Don't use Always

Don't use an Always > Bluewing Client: Send To Channel/Peer to update your position.

Many tutorials tell beginners to use it simply because it's easier and hides timing problems, but it is overkill.

While this limit isn't hit often, Bluewing caps how many events it will run per frame, and therefore per second.

This is normally 10, which in a standard 60FPS app gives you a whopping 600 events per second.

If you're using Always > Send Position, then as soon as you hit 11 players, each client receives 10 peers × 60 messages = 600 messages per second, the entire limit, so any extra message overloads it, resulting in an error like this:

"You're receiving too many messages for the application to process. Max of 10 events per event loop, currently X messages in queue."

(On a side note, this error does not occur if the queue overloads as a one-off; it must happen multiple times before the error is reported. It can also happen during a long frame transition: events can't be run without an extension instance to run them on, so a long queue backlog builds up.)

Don't just try to boost the limits

Boosting the frame rate so more messages can be processed won't fix it, because Always runs once per frame, so your 120FPS app will just send 120 messages per second instead.

It's very easy on the C++ side to boost the limit to more messages per tick, but that misses the point: even if nothing client-side is complaining about the load, the server is still heavily loaded.

In the scenario described above, each client receives 600 messages per second. The server is sending all 600 of those to each client, so multiply by 11 clients and the server is sending out 6,600 messages per second. That's for a single game.

Two games of 11 players each put the server instantly into five-digit range, having to upload 13,200 messages and download 1,320 messages each second. Obviously, that's not sustainable.
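The arithmetic above can be checked with a small C++ sketch. It assumes every position message sent to the channel is relayed by the server to every other client, and ignores other traffic such as pings; the function and field names are hypothetical.

```cpp
#include <cassert>

// Messages per second for one game where each of `players` clients sends
// `rate` position messages per second to the channel.
struct GameLoad {
    long perClientReceive;  // messages each client receives per second
    long serverDownload;    // messages the server receives per second
    long serverUpload;      // messages the server relays per second
};

GameLoad positionLoad(long players, long rate) {
    GameLoad load{};
    load.perClientReceive = (players - 1) * rate;  // from every other peer
    load.serverDownload = players * rate;          // one stream per client
    load.serverUpload = players * load.perClientReceive;
    return load;
}

// Events Bluewing can run per second at a given frame rate and per-frame cap.
long eventBudgetPerSecond(long fps, long eventsPerFrame) { return fps * eventsPerFrame; }
```

With `positionLoad(11, 60)`, each client receives 600 messages per second (the whole budget of `eventBudgetPerSecond(60, 10)`), the server downloads 660 and uploads 6,600. At 20 messages per second, `positionLoad(31, 20)` gives the same 600 per client.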

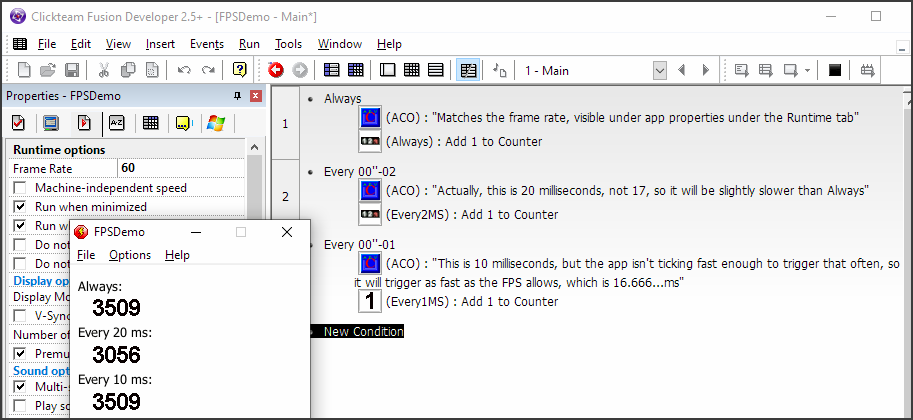

Understand the app timer

Try to understand what your app is actually doing in ticks. You won't get exactly one position message from each peer every time the frame displays, because nothing guarantees that exactly one message arrives between every two ticks. Often, due to being out of sync, there will be zero position messages in one tick and two the next, just because the receiving OS took slightly longer than usual.

In the example above, a frame rate of 60 FPS means 60 frame ticks every second, so each tick lasts 1.0 second ÷ 60 = 0.01666… seconds, or 16.666… milliseconds. We'll round to 17ms.

The app timer can take some getting used to, but here's an example:

Notice the frame rate at the top left, set under the Runtime tab of the application properties.

Always runs every 16.67 milliseconds, or about 1.67/100ths of a second, as mentioned before.

You'll notice the Every 10 milliseconds event doesn't run more often than Always, because the app doesn't tick that frequently. You could make it run as expected by boosting the frame rate, but that would burn more CPU.

The general practice is to set the frame rate only to match the display's refresh rate (60Hz = 60FPS, sometimes 120Hz or 144Hz). Expert Fusion users set a high frame rate and use delta time: they measure the exact time between each tick and multiply their movement by it, rather than moving the same distance every tick regardless of whether the tick was slightly delayed.

Note: the unit used in the Every X condition is hundredths of a second, but milliseconds are thousandths of a second. To enter 17 milliseconds, you have to press the "Use a Calculation" button and type 17.

So, back to why you shouldn't use Always: why not use something like Every 5/100 seconds instead? That's plenty frequent, and it cuts each client's sending from 60 to 20 messages per second, making it 3x easier for the clients and server to process.
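A minimal C++ sketch of delta-time movement, assuming a hypothetical `Mover` whose speed is in pixels per second: each tick moves it by speed × elapsed seconds, so the distance covered in one second is the same whether that second had two ticks or four.

```cpp
#include <cassert>

// Hypothetical object moved by delta time.
struct Mover {
    double x = 0.0;
    double speedPxPerSec = 0.0;

    // Move by the time actually elapsed since the last tick,
    // not by a fixed amount per tick.
    void tick(double elapsedSeconds) { x += speedPxPerSec * elapsedSeconds; }
};
```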

If your display now judders slightly, you might want to smooth over the gaps between position updates; fortunately, there are two main ways of doing this:

Send what changes; send what isn't known

When designing an app, instead of constantly sending data even when it doesn't change, send only what changes, or what is otherwise different from the last message.

For example, you don't need to send the position constantly if the player has stopped; you only need to send the position they stopped at, in a unique message that says they stopped.
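A C++ sketch of send-on-change, assuming a hypothetical `ChangeSender` that tracks the last direction it sent: it returns true only when the direction differs, so a player holding one direction sends once instead of every tick. When the new state is a stop, that's where you'd include the final position.

```cpp
#include <cassert>
#include <optional>

enum class Direction { Stopped, Up, Down, Left, Right };

// Hypothetical sender that only sends when the direction changes.
class ChangeSender {
public:
    // Returns true if a message should be sent this tick.
    bool update(Direction current) {
        if (lastSent_ && *lastSent_ == current) return false;  // nothing new
        lastSent_ = current;
        return true;
    }

private:
    std::optional<Direction> lastSent_;  // empty until the first send
};
```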

One design method has the client apps not respond to keys directly at all; instead, they simply tell the server which button was pressed, and the server sends back where their player object is and what it's doing. In effect, the server handles "on player pressed x" and moves the object, then messages the player back with the new movement/position.

This method should only be used by experts, as it puts a lot of weight on the server, and is usually overkill unless the app is very finely timed and the server needs to compensate for microseconds.

Instead, simply have the players tell each other when they change direction, and use an infrequent position update; every 0.05 seconds (20 msgs/sec) is usually more than sufficient and won't easily overload the client's event trigger limit discussed above.

At 20 msgs/sec, a 60FPS app needs around 31 clients before each one receives too many messages for the built-in limit of 10 events per tick.

Predict the movements

Have your client predict where the other clients are, by having each client app send not just their player's position, but the direction, and the speed. That way, the other clients can move their peers to go that direction at the right speed, and in the gaps between position update messages, the peer will be moving properly. Use something like Bouncing Ball movement instead of 8-Direction for peer objects.

You can consider using a method called Dead Reckoning (described on Wikipedia), which uses math to estimate an object's current position from its previous position updates.

Dead Reckoning basically predicts by difference over time, using as little as two messages.

For example, take coordinates (10, 5) and (20, 5), received 1.0 second apart; DR will calculate that the next message, due in 1.0 seconds, should be (30, 5). So DR concludes that every 0.1 seconds, X should go up by 1, and by the time the third message should be received, the object should be at (30, 5).
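The example above as a C++ sketch of basic linear dead reckoning (hypothetical `Sample`, `Point` and `predict` names): the velocity implied by the last two updates is assumed to stay constant. This is the simple version described here, not the advanced algorithm in the Dead Reckoning object.

```cpp
#include <cassert>
#include <cmath>

struct Sample {
    double time;  // seconds, when the update was received
    double x, y;
};

struct Point {
    double x, y;
};

// Assume the object keeps the velocity implied by the last two updates,
// and extrapolate its position to time t.
Point predict(const Sample& older, const Sample& newer, double t) {
    const double dt = newer.time - older.time;
    if (dt <= 0.0) return {newer.x, newer.y};  // can't infer a velocity
    const double vx = (newer.x - older.x) / dt;
    const double vy = (newer.y - older.y) / dt;
    const double ahead = t - newer.time;
    return {newer.x + vx * ahead, newer.y + vy * ahead};
}
```

With (10, 5) at 0.0s and (20, 5) at 1.0s, `predict` gives (30, 5) at 2.0s, and about (21, 5) at 1.1s.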

In practice, a basic prediction like that works most of the time, but ignores subtler things like acceleration, and gets easily confused when changing direction.

That's the downside of predicting movements: when the player changes direction, the predicted location is quite a bit off from where the player actually is, when the new direction message comes through. That results in the player suddenly jumping. For example, if the player is going downwards, then switches to strafing right, the player will jump from a low position to higher up and further right.

You can do some smoothing of direction changes, perhaps with something like Easing, but ultimately the key thing is improving performance so the new position jumps are very minor.
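One simple smoothing approach, sketched in C++ (this is plain exponential smoothing, not the Easing object's algorithm, and `Smoother` is hypothetical): instead of snapping the displayed position to a corrected one, move a fixed fraction of the remaining gap each tick.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical smoother for one coordinate of a peer's displayed position.
struct Smoother {
    double shown;      // position currently displayed
    double smoothing;  // 0..1: fraction of the gap closed per tick

    // Moves the displayed position part of the way toward the target.
    double tick(double target) {
        shown += (target - shown) * smoothing;
        return shown;
    }
};
```

Because the fraction is applied per tick, the smoothing speed depends on frame rate; with delta time you would scale it by elapsed time.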

You can also reduce the jump by sending a message immediately when the set of direction keys held down changes, instead of only sending the current direction/speed Every X/100 seconds. That way, the new direction message arrives as fast as possible.

It may be worth dedicating a subchannel to changes of direction; some games only use changes in direction, and don't regularly send their position. Find what system works the best for you.

An advanced Dead Reckoning algorithm, with built-in smoothing when directions/angles change, was implemented in a Fusion object called Dead Reckoning (you can get it here).

For obvious reasons, you should implement peers' collision with terrain on the client-side too. You don't want peer players going downwards and phasing through a wall on screen, before the message saying that they've stopped when they hit the wall comes through and they snap back up out of the wall.

Expert: MMO, merge messages on server-side

This is for experts only. If you're confused by the terms here, you're probably not an expert and could do with taking it slowly. Jumping in the deep end when you can barely swim in the shallow will just drown you and demotivate you when the code confuses you for too long.

If you're familiar with binary messages, you'll know you can arrange the data in them however you want. While this is an unusual method, it saves a great deal of processing time in bigger games where you don't want to flood the network with tons of tiny messages.

Consider a scenario with a ton of players on a huge MMO map: we're talking hundreds of players and a map spanning maybe ten screens side by side. You don't want to send thousands of position update messages, blindly transferring them even when the players concerned won't be displayed anytime soon, but what is the alternative?

Well, have the server create a binary message Every X/100 seconds, the message being a list of peer IDs and their associated data, and send that message from the server to a channel containing all active players, rather than having all the players message each other.

The process breaks down to this:

- Loop all clients.

- Find clients near the client selected by the main loop; near by x/y position, taking into account their screen size and a healthy margin around it.

- Add nearby client count to binary message.

- Loop through all those nearby clients.

- For each nearby one, add to binary message their Peer ID, X/Y position, direction/angle – whatever your game needs.

- Once the nearby clients sub-loop is over, send the message to the main-loop client.
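The server steps above, sketched in C++ under some assumptions: the message layout (a uint16 count, then per peer a uint16 ID and int16 X/Y, little-endian), the `ServerClient` struct and the `buildNearbyMessage` helper are all hypothetical, and a real game would add direction/angle and whatever else it needs. The main-loop client itself is left out of its own list.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstdlib>
#include <vector>

struct ServerClient {
    std::uint16_t id;
    std::int16_t x, y;
};

// Appends a 16-bit value, little-endian.
void writeU16(std::vector<std::uint8_t>& out, std::uint16_t v) {
    out.push_back(static_cast<std::uint8_t>(v & 0xFF));
    out.push_back(static_cast<std::uint8_t>(v >> 8));
}

// Builds the update for clients[self]: every other client within
// rangeX/rangeY (screen half-size plus margin) of it.
std::vector<std::uint8_t> buildNearbyMessage(const std::vector<ServerClient>& clients,
                                             std::size_t self, int rangeX, int rangeY) {
    std::vector<const ServerClient*> nearby;
    for (std::size_t i = 0; i < clients.size(); ++i) {
        if (i == self) continue;
        if (std::abs(clients[i].x - clients[self].x) <= rangeX &&
            std::abs(clients[i].y - clients[self].y) <= rangeY)
            nearby.push_back(&clients[i]);
    }
    std::vector<std::uint8_t> msg;
    writeU16(msg, static_cast<std::uint16_t>(nearby.size()));  // nearby count
    for (const ServerClient* c : nearby) {
        writeU16(msg, c->id);
        writeU16(msg, static_cast<std::uint16_t>(c->x));
        writeU16(msg, static_cast<std::uint16_t>(c->y));
    }
    return msg;
}
```

The main loop then calls this once per client, e.g. `buildNearbyMessage(clients, i, halfScreenWidth + margin, halfScreenHeight + margin)`, and sends the result to client `i`.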

And on client-side:

- On message received, read back the nearby client count from the binary message.

- Start a loop that runs (nearby client count) times.

- In the loop, read the Peer ID.

- If there are new peer IDs, create peer objects. (See creating player objects example.)

- Select the peer object with the matching peer ID, and read the X/Y position, direction/angle, as in step 5 of the server side. (See selecting player objects example.)

- If some peer IDs are missing since the last message, destroy the peer objects that correspond to them.

And that's all there is to it.
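The client steps, as a matching C++ sketch using the same assumed layout; here a `std::map` of hypothetical `PeerObject`s stands in for Fusion peer objects, so "create" and "destroy" become map inserts and erases.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <map>
#include <set>
#include <vector>

struct PeerObject {
    std::int16_t x = 0, y = 0;
};

// Reads a little-endian 16-bit value and advances pos.
std::uint16_t readU16(const std::vector<std::uint8_t>& buf, std::size_t& pos) {
    const auto v = static_cast<std::uint16_t>(buf.at(pos) | (buf.at(pos + 1) << 8));
    pos += 2;
    return v;
}

// Applies one nearby-peers message: creates new peers, updates existing
// ones, and destroys peers that are no longer listed.
void applyNearbyMessage(const std::vector<std::uint8_t>& msg,
                        std::map<std::uint16_t, PeerObject>& peers) {
    std::size_t pos = 0;
    const std::uint16_t count = readU16(msg, pos);
    std::set<std::uint16_t> seen;
    for (std::uint16_t i = 0; i < count; ++i) {
        const std::uint16_t id = readU16(msg, pos);
        PeerObject& peer = peers[id];  // operator[] creates the peer if it's new
        peer.x = static_cast<std::int16_t>(readU16(msg, pos));
        peer.y = static_cast<std::int16_t>(readU16(msg, pos));
        seen.insert(id);
    }
    for (auto it = peers.begin(); it != peers.end();) {
        if (seen.count(it->first) == 0) it = peers.erase(it);  // missing: destroy
        else ++it;
    }
}
```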

On the server side, instead of having visible objects on-screen (thousands of individually moving objects will slow down the app immensely), try keeping peers grouped as one big list, instead of individual objects.

You will need a way to store a list of peer IDs, X/Y positions and so on; for example, using the Binary object. A binary object allows you to buffer-copy directly from received position update messages, and buffer-copy back into the messages you're building to send to clients, using the memory address and size features. (Don't forget to resize the message to send before buffer-copying into it from a Binary object, though.)

Ultimately, you have a much faster system that sends far fewer messages and processes them much faster. It's also very simple to move clients between servers, by having the servers handle different parts of the large map; RuneScape does that, for example.

You will need other messages to handle one-offs: when a new peer joins, send their name if necessary; if a peer changes equipment, send a message about that to nearby peers, and also to any peer that later gets close enough. You don't want clients across the map knowing details they don't need: partly because modified clients would get forewarning of what their enemies have equipped before they're on screen (akin to stream sniping), and partly because it's wasted bandwidth.

Word of caution

Unless you're able to get a cloud server and hyper-optimize your apps, don't expect a smoothness matching your favorite AAA game like Rainbow Six Siege. The best you can get is handling the big things like damage server-side, handling the display things like peers' collision with terrain on client-side, and optimizing things to use binary messages and buffers as described above.

Fusion is not C++ and isn't the fastest language out there, but until you're hitting hundreds of players on screen, you shouldn't have speed issues on the client side.

On the server side, there are always tricks to make things faster, and ways of dividing server load up by connecting clients to different servers, and having the servers communicate with each other.

Big games are a balance: too much work done server-side makes the server too slow for everyone, while too little makes the client side slower or the game easy to exploit. Too many checks for exploits server-side make the server too slow; too few make the game unplayable for legit players, as abusers make it an unfriendly environment.

It may not mean much to you if you die twenty times to Hacker McGee before you finish typing the ban command, but for someone who's sweated and micro-optimized their gaming to improve their kill/death ratio, that could mean the difference between quitting and staying on.

And if there's a risk of 5% of legit players being accidentally caught up in the new auto-ban code, you should seriously consider that you don't know how many of those 5% are central players who are the life of their guilds, how many are sinking real-world money into upgrades, or how many have a large following or are streamers, etc.

Always test measures you take thoroughly, use a beta group that can try it out first and report issues, make sure you understand as many edge cases as possible; slowly deploy it to the public (one server at a time, perhaps), and try your best to make things logged and reversible, without sacrificing performance.

Fusion is great for its ratio of coding time to results. At some point, though, Fusion will require too many workarounds to be feasible; but a good Fusion developer would be able to run an MMO for years with a growing client base before hitting the point where it's actually better to rebuild from scratch outside Fusion.

And remember; if server speed is your biggest concern, there is an open-source pure-C++ version of Bluewing Server, bluewing-cpp-server, written by the creators of Lacewing Blue.

Don't forget to also read the Security article, and the rest of the tips for performance.

Bots or AIs

If you're doing AIs, make sure one client or the server is the master that decides what the AIs are doing. Predicting on all clients which player the AI is chasing is a good idea, but remember that positions may be a few pixels off between screens, so an AI may chase the closest player on one screen and a different closest player on another.

This is where the master comes in. It doesn't have to be the built-in channel master, although that's an option; it just has to be a peer that tells all the other clients what the AIs are doing.

Each of the clients should still predict the AIs individually, including terrain collisions, to result in a smooth-looking game because the AIs respond instantly on their screen. (In events, if AI collides with wall, stop AI.)

But in edge scenarios where the AI is equally close to two players, which player do they target? Well, each client guesses for themselves based on what they have on their screen… at least until the master's message comes through saying who the AI is targeting, and where the AI is currently when chasing that player.

On a side note, the new Scope Control object (Fusion 2.5 only, not Fusion 2.0) is excellent for finding the nearest enemy to a player. There are ways to do it without an extension, using a doubly nested foreach loop, but they're much slower and harder to do.
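The nested-loop approach, sketched in C++ with hypothetical `Entity` and `nearestPlayers` names. On the master, the result would be what gets sent to the other clients. Comparing squared distances avoids a square root per pair; with a strict `<`, an exact tie goes to the first player checked, which is fine on the master because every client then follows its decision.

```cpp
#include <cassert>
#include <cstddef>
#include <limits>
#include <vector>

struct Entity {
    double x, y;
};

// For each AI, checks every player and keeps the closest.
// Returns the index of each AI's nearest player, or -1 if there are none.
std::vector<int> nearestPlayers(const std::vector<Entity>& ais,
                                const std::vector<Entity>& players) {
    std::vector<int> targets;
    for (const Entity& ai : ais) {
        int best = -1;
        double bestDistSq = std::numeric_limits<double>::max();
        for (std::size_t p = 0; p < players.size(); ++p) {
            const double dx = players[p].x - ai.x;
            const double dy = players[p].y - ai.y;
            const double distSq = dx * dx + dy * dy;
            if (distSq < bestDistSq) {
                bestDistSq = distSq;
                best = static_cast<int>(p);
            }
        }
        targets.push_back(best);
    }
    return targets;
}
```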

Compression tips

Tips on using the zlib compression (or other compression algorithms) with Lacewing effectively.

Don't re-compress

Attempting to re-compress a compressed message will work, and will need two decompression actions on the receiving end, as you might expect, but the second compression will usually make the message bigger, as there are no more patterns to find.

So don't compress files that are already compressed, such as PNG (which uses zlib internally), JPEG (which uses lossy compression), and ZIP/RAR/7z archives. Video files are likely also highly compressed, on the off chance you're sending those.

Similarly, in Lacewing, don't combine zlib with other compression algorithms such as the LZMA2 Object. You can use either algorithm on its own (LZMA2 usually has a better compression ratio, at the cost of more time), but all lossless compression algorithms work by finding patterns, and whichever algorithm you run first, the second will find no patterns left and will end up making the message bigger.

Compress the biggest data

You'll see the biggest size savings if your binary message is mostly or entirely long text. Otherwise, zlib will still try to find patterns in the bytes, and will usually compress a bigger message better.

If your message is too short (under 100 bytes, as a rule of thumb), zlib won't find enough patterns and will compress poorly, often making the message bigger.

If you're sending in parts, compress outside of Lacewing. See the Examples help topic.

Note that Lacewing Blue caps decompression at around 250MB, because Fusion apps have limited memory; attempting to decompress a message that would expand beyond that size results in an error.

This is also a measure against a hacker sending a modified message that claims it will decompress to a huge size, which would make the receiving Client try to reserve that much memory, fail, and probably crash. Blue is also coded to handle not having enough memory to decompress.

Compression speed

In terms of speed, while the compression is very quick, generally fractions of a second, it's not instant, so you may need to do some performance tests to see if compressing slows your sender/receiver app too much to be worth it.

The compression is synchronous, so your app will pause until the compression completes; normally this isn't visible, but could result in a small visual stutter, so you probably don't want to be doing it in a fast-paced game.

Compress as last operation before send/blast

While it's possible to add more to the binary to send after using compression, the decompression will fail as it will try to decompress the whole message, including the part that wasn't originally compressed.

You could use the Binary object and Memory object to copy out the compressed section, as Binary Object can also run a zlib decompress… but at that point it's getting unnecessarily complicated.

The resulting message

Note that the first thing in a zlib-compressed message is a 32-bit unsigned integer containing the original uncompressed size. The compressed size, of course, can be read with the Received > Get binary size expression.

Some older versions of Lacewing Blue had a decompression error caused by not having that integer, giving "Error with decompression, inflate() returned error -5. Zlib error". If you're getting decompression errors with more recent Blue, or with Relay, you're not compressing the whole message; check that nothing is added after the compression action.
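A C++ sketch of reading that size prefix defensively, in the spirit of the cap described above. It assumes the integer is little-endian, and `kMaxDecompressedSize` is a hypothetical constant approximating the ~250MB limit, not Blue's exact value. Rejecting an oversized claim before reserving memory is what stops a forged header from triggering a huge allocation.

```cpp
#include <cassert>
#include <cstdint>
#include <optional>
#include <vector>

// Hypothetical cap approximating Blue's ~250MB decompression limit.
constexpr std::uint32_t kMaxDecompressedSize = 250u * 1024u * 1024u;

// Reads the 32-bit uncompressed-size prefix (assumed little-endian) and
// rejects it if the message is too short or the claimed size is over the cap.
std::optional<std::uint32_t> readClaimedSize(const std::vector<std::uint8_t>& msg) {
    if (msg.size() < 4) return std::nullopt;
    const std::uint32_t size = static_cast<std::uint32_t>(msg[0]) |
                               (static_cast<std::uint32_t>(msg[1]) << 8) |
                               (static_cast<std::uint32_t>(msg[2]) << 16) |
                               (static_cast<std::uint32_t>(msg[3]) << 24);
    if (size > kMaxDecompressedSize) return std::nullopt;
    return size;
}
```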

Use the right handling method

If you're using Blue Server, make good use of the Enable/disable conditions actions.

The performance of the handling methods is covered more under those actions' help topics.

If you're tempted to use the slowest "wait for Fusion" handling method, only do so if the server needs to run events every time to decide whether to approve the request.

Sometimes this will be the case, but if the server just has an "allow/deny" checkbox in the UI, or another simple condition, you'd be better off switching the handling method when the checkbox changes, and setting the deny reason as appropriate.

As a second example, if you want a maximum of 32 clients on your server, it's best to set the handling method to auto-deny after the 32nd client connects, and set it back to auto-approve when one of the 32 clients disconnects.
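A C++ sketch of that bookkeeping, with a hypothetical `ConnectionLimiter` and `HandlingMode`; in Blue Server itself you would call the Enable Conditions actions at these points instead of setting an enum.

```cpp
#include <cassert>

// Hypothetical stand-in for Blue Server's connection handling methods.
enum class HandlingMode { AutoApprove, AutoDeny };

class ConnectionLimiter {
public:
    explicit ConnectionLimiter(int maxClients) : max_(maxClients) {}

    // Call from On connect: once the limit is reached, switch to auto-deny.
    void onConnect() { ++count_; updateMode(); }

    // Call from On disconnect: a slot is free again, so auto-approve.
    void onDisconnect() { --count_; updateMode(); }

    HandlingMode mode() const { return mode_; }

private:
    void updateMode() {
        mode_ = count_ >= max_ ? HandlingMode::AutoDeny : HandlingMode::AutoApprove;
    }

    int max_;
    int count_ = 0;
    HandlingMode mode_ = HandlingMode::AutoApprove;
};
```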